Convolution semantic distance

Semantic comparison of two text fragments needs a measure of their proximity as a number indicating the rate of proximity on the scale 0.0 to 1.0, assuming 0.0 for completely different and 1.0 for identical fragments. One simple and popular measure is a vectors cosine distance, when two text fragments are converted into vectors, where each component is a count for a particular word. The result is 1.0 for identical and 0.0 for orthogonal vectors. The disadvantage of cosine distance is so called bag-of-words approach. It simply ignores words' order. The order in words may not be that important for paragraphs but important for large documents. Documents may convey different information in their different parts or paragraphs. We can't expect that words SINGULAR and VALUE and DECOMPOSITION necessarily means that document speaks about SINGULAR VALUE DECOMPOSITION if these words not follow each other in the document in that exact order.There are some known attempts to include the words' order into computed distance. Let us mention US patent 8,401,841, which suggests to record all word pairs within each paragraph and compare paragraph to paragraph by counting common pairs. If the same words in two compared documents are spread apart, the documents will not be considered as semantically close. That approach is much better than a bag-of-words for the whole document but it needs some sort of long preprocessing prior to usage of the query. For the quick search the whole collection of documents need to be processed, all pairs needs to be added to the database along with unique paragraph index. When query is processed, the word pairs are made for the query as well in the same way and paragraphs where these pairs occurred can be quickly extracted from the database. The documents can be sorted according to the number of discovered shared pairs.

We do not criticize this approach, because it is effective for the large collections, but offer another method that may cover cases for small collections. Assume we have only few hundred documents and need to sort them according to semantic similarity to a given document. We need some quick and simple routine that do that without burden of creation of a database. Such method is explained below.

Let us consider as an example two following paragraphs:

- The quick brown fox jumps over the lazy dog

- The fur coat is made of the quick brown fox that failed to jump over the lazy dog

- quick brown fox jump lazi dog

- fur coat quick brown fox fail jump lazi dog

- 0 1 2 3 4 5

- 6 7 0 1 2 8 3 4 5

| ... | 6 | 7 | 0 | 1 | 2 | 8 | 3 | 4 | 5 |

| 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 |

| 1 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 |

| 2 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 |

| 3 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 |

| 4 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 |

| 5 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 |

The distance is computed as sums of squares of the lengths of diagonal chains of ones divided by the number of elements in the matrix, that means [32 + 32] / [ 6 * 9 ] = 0.33.

The geometric interpretation is making square fragments for each non-zero diagonal chains and compute ratio as number of ones in the matrix divided by total number of elements.

| ... | 6 | 7 | 0 | 1 | 2 | 8 | 3 | 4 | 5 |

| 0 | 0 | 0 | 1 | 1 | 1 | 0 | 0 | 0 | 0 |

| 1 | 0 | 0 | 1 | 1 | 1 | 0 | 0 | 0 | 0 |

| 2 | 0 | 0 | 1 | 1 | 1 | 0 | 0 | 0 | 0 |

| 3 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 1 | 1 |

| 4 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 1 | 1 |

| 5 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 1 | 1 |

Obviously, if paragraphs do not have common words the measure will be zero, for identical paragraphs it will be one. Even if documents have common words the distance also depends on how long are the similar word chains.

The building of such matrices for large documents will be computationally expensive. We may need to compare not just paragraphs but documents with several thousand words in each. With certain approximation we can achieve the same result by simple convolution and comparison of the elements. To achieve that we slide vectors along each other in the manner shown below and count common elements on each step.

- 0 1 2 3 4 5

- - - - - - 6 7 0 1 2 8 3 4 5

- 0 1 2 3 4 5

- - - - - 6 7 0 1 2 8 3 4 5

- - - 0 1 2 3 4 5

- 6 7 0 1 2 8 3 4 5

- - - - - - - - - 0 1 2 3 4 5

- 6 7 0 1 2 8 3 4 5

The computational part is very quick. For two vectors of sizes m and n the number of necessary comparisons is product m * n and we compare the integers, which is quick processor operation.

The function that computes convolution semantic distance for two vectors is shown below.

public double ConvolutionProximity(int[] x, int[] y)

{

if (x == null) return 0.0;

if (y == null) return 0.0;

if (x.Length <= 0) return 0.0;

if (y.Length <= 0) return 0.0;

int max = x.Length;

if (y.Length > max) max = y.Length;

double totalMatches = 0.0;

bool bFlag = false;

int count = 0;

for (int j = y.Length - 1; j >= 0; --j)

{

int i = 0;

for (int k = 0; k < max; ++k)

{

int ii = i + k;

int jj = j + k;

if (ii >= x.Length) break;

if (jj >= y.Length) break;

if (x[ii] == y[jj])

{

++count;

bFlag = true;

}

else

{

if (bFlag == true)

{

if (count > 0)

totalMatches += (double)(count * count);

bFlag = false;

count = 0;

}

}

}

if (bFlag == true)

{

if (count > 0)

totalMatches += (double)(count * count);

bFlag = false;

count = 0;

}

}

count = 0;

bFlag = false;

for (int i = 1; i < x.Length; ++i)

{

int j = 0;

for (int k = j; k < max; ++k)

{

int ii = i + k;

int jj = j + k;

if (ii >= x.Length) break;

if (jj >= y.Length) break;

if (x[ii] == y[jj])

{

++count;

bFlag = true;

}

else

{

if (bFlag == true)

{

if (count > 0)

totalMatches += (double)(count * count);

bFlag = false;

count = 0;

}

}

}

if (bFlag == true)

{

if (count > 0)

totalMatches += (double)(count * count);

bFlag = false;

count = 0;

}

}

double proximity = totalMatches / ((double)(x.Length * y.Length));

if (proximity > 1.0) proximity = 1.0;

return proximity;

}

The code is written in c# and is optimized for fast execution, but depending on the

programming language and operating system it can still be optimized much further.

In c++ fast navigation along arrays can be done by incrementing pointers.

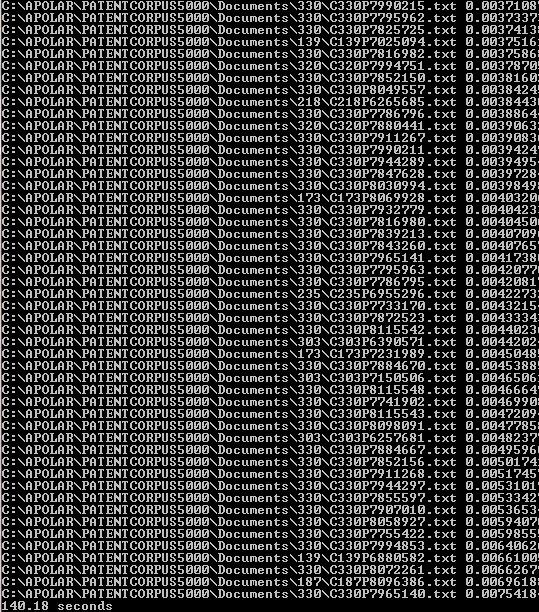

The algorithm was also tested on the large text collection. The data is PATENTCORPUS5000. It contains files with technical details of 5000 patents grouped by 100 in 50 different classes. The definition of one of these classes (class 330) is selected as the first file and was compared to each of 5000 files from collection. The result is sorted from bottom to top according to computed semantic distance. In the screenshot bellow subfolder \330\ in the path of the document indicates the correctly identified documents.

The test may be considered as natural language processing of realistic data. The class definition written by patent expert was compared to technical description of the patents written by different people - patent attorneys. The processing time was 140 seconds for 155 MB of data in a single threaded application. Obviously, the processing can be done in a concurrent way.

October 7, 2014.